Differences

This shows you the differences between two versions of the page.


| teaching:gsoc2014 [2014/02/25 14:04] – [Topic 2: CRAM -- Symbolic Reasoning Tools with Bullet] tenorth | teaching:gsoc2014 [2014/07/08 11:23] – [KnowRob -- Robot Knowledge Processing] tenorth | ||

|---|---|---|---|

| Line 9: | Line 9: | ||

| are available under BSD license, and partly (L)GPL. | are available under BSD license, and partly (L)GPL. | ||

| + | If you are interested in working on a topic and meet its general criteria, you should have a look at the [[teaching: | ||

| ===== KnowRob -- Robot Knowledge Processing ===== | ===== KnowRob -- Robot Knowledge Processing ===== | ||

| Line 25: | Line 26: | ||

| of applications, | of applications, | ||

| describing multi-robot search-and-rescue tasks (SHERPA), assisting elderly | describing multi-robot search-and-rescue tasks (SHERPA), assisting elderly | ||

| - | people in their homes (SRS) to industrial assembly tasks (SMERobotics). | + | people in their homes (SRS) to industrial assembly tasks ([[http:// |

| KnowRob is an open-source project hosted at [[http:// | KnowRob is an open-source project hosted at [[http:// | ||

| Line 51: | Line 52: | ||

| human robot interaction (SAPHARI). | human robot interaction (SAPHARI). | ||

| Further information, | Further information, | ||

| - | use-cases can be found at the [[http:// | + | use-cases can be found at the [[http:// |

| website]]. | website]]. | ||

| Line 58: | Line 59: | ||

| ==== CRAM -- Virtual Robot Scenarios in Gazebo ==== | ==== CRAM -- Virtual Robot Scenarios in Gazebo ==== | ||

| + | {{ : | ||

| **Main Objective: | **Main Objective: | ||

| for human-sized robots. This is done using ROS, the Gazebo robot | for human-sized robots. This is done using ROS, the Gazebo robot | ||

| Line 65: | Line 67: | ||

| and closing drawers and doors. | and closing drawers and doors. | ||

| This involves designing virtual environments for Gazebo and/or writing robot plans in Lisp using the CRAM high-level language, sending commands to virtual PR2 or REEM(-C) robots in Gazebo, and manipulating the artificial environment in there. The connection to an elaborate high-level system holds a lot of interesting opportunities. | This involves designing virtual environments for Gazebo and/or writing robot plans in Lisp using the CRAM high-level language, sending commands to virtual PR2 or REEM(-C) robots in Gazebo, and manipulating the artificial environment in there. The connection to an elaborate high-level system holds a lot of interesting opportunities. | ||

| + | {{ : | ||

| The produced code will, when working in a simulated environment, | The produced code will, when working in a simulated environment, | ||

| Line 72: | Line 75: | ||

| contributions to the software library that can be used as part of a | contributions to the software library that can be used as part of a | ||

| robot' | robot' | ||

| + | |||

| + | Contact: [[team/ | ||

| ==== Topic 2: CRAM -- Symbolic Reasoning Tools with Bullet ==== | ==== Topic 2: CRAM -- Symbolic Reasoning Tools with Bullet ==== | ||

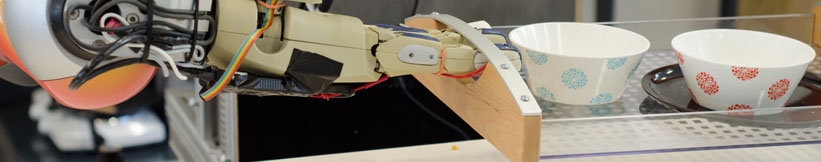

| {{ : | {{ : | ||

| - | **Main Objective: | + | **Main Objective: |

| - | **Task Difficulty: | + | Possible sub-projects: |

| + | **Task Difficulty: | ||

| + | {{ : | ||

| **Requirements: | **Requirements: | ||

| - | **Expected Results:** We expect operational and robust contributions to the software library that can be used as part of a robot' | + | **Expected Results:** We expect operational and robust contributions to the software library that can be used as a part of robot' |

| + | For more information consult the [[http:// | ||

| + | |||

| + | Contact: [[team/ | ||

| ==== Topic 3: KnowRob -- Reasoning about 3D CAD models of objects ==== | ==== Topic 3: KnowRob -- Reasoning about 3D CAD models of objects ==== | ||

| < | < | ||

| Line 106: | Line 116: | ||

| to the software library that can be used as part of a robot' | to the software library that can be used as part of a robot' | ||

| program. | program. | ||

| + | |||

| + | Contact: [[team/ | ||

