Differences

This shows you the differences between two versions of the page.

| teaching:gsoc2018 [2018/01/21 20:29] – balintbe | teaching:gsoc2018 [2018/02/20 19:22] – [Topic 3: Unreal - ROS 2 Integration] ahaidu | ||

|---|---|---|---|

| Line 4: | Line 4: | ||

| ====== Google Summer of Code 2018 ====== | ====== Google Summer of Code 2018 ====== | ||

| ~~NOTOC~~ | ~~NOTOC~~ | ||

| + | |||

| + | In the following we briefly present the [[# | ||

| + | |||

| + | For the **proposed topics** see [[# | ||

| + | |||

| + | For **Q/A** check out our [[https:// | ||

| + | |||

| + | |||

| + | ===== Software ===== | ||

| + | |||

| ===== pracmln ===== | ===== pracmln ===== | ||

| Line 37: | Line 47: | ||

| RoboSherlock builds on top of the ROS ecosystem and is able to wrap almost any existing perception algorithm/ | RoboSherlock builds on top of the ROS ecosystem and is able to wrap almost any existing perception algorithm/ | ||

| + | |||

| + | ===== openEASE -- Web-based Robot Knowledge Service ===== | ||

| + | |||

| + | OpenEASE is a generic knowledge database for collecting and analyzing experiment data. Its foundation is the KnowRob knowledge processing system and ROS, enhanced by reasoning mechanisms and a web interface developed for inspecting comprehensive experiment logs. These logs can be recorded, for example, from complex CRAM plan executions, virtual reality experiments, | ||

| + | |||

| + | The OpenEASE web interface as well as further information and publication material can be accessed through its publicly available [[http:// | ||

| + | |||

| + | ===== RobCoG - Robot Commonsense Games ===== | ||

| + | |||

| + | [[http:// | ||

| + | |||

| + | The games are split into two categories: (1) VR/Full Body Tracking with physics-based interactions, | ||

| + | |||

| + | |||

| + | ===== CRAM - Cognition-enabled Robot Executive ===== | ||

| + | |||

| + | CRAM is a software toolbox for the design, implementation and deployment of cognition-enabled plan execution on autonomous robots. CRAM equips autonomous robots with lightweight reasoning mechanisms that can infer control decisions rather than requiring the decisions to be preprogrammed. This way, autonomous robots programmed with CRAM are more flexible and general than robots whose control programs lack such cognitive capabilities. CRAM does not require the whole reasoning domain to be stated explicitly in an abstract knowledge base. Rather, it grounds symbolic expressions in the perception and actuation routines and in the essential data structures of the control plans. CRAM includes a domain-specific language that makes it easier for the programmer to write reactive, concurrent robot behavior. It makes extensive use of the ROS middleware infrastructure. | ||

| + | |||

| + | CRAM is an open-source project hosted on [[https:// | ||

| + | [[http:// | ||

| + | and tutorials that help to get started. | ||

| Line 66: | Line 97: | ||

| **Requirements: | **Requirements: | ||

| language (CPython/ | language (CPython/ | ||

| - | (ideally SRL techniques and logic) | + | (ideally SRL techniques and logic). Knowledge of C/C++ will be very helpful. |

| **Expected Results:** The core components of pracmln, i.e. the learning | **Expected Results:** The core components of pracmln, i.e. the learning | ||

| Line 75: | Line 106: | ||

| **Contact: | **Contact: | ||

| + | **Remarks: | ||

| + | ==== Topic 2: Flexible perception pipeline manipulation for RoboSherlock ==== | ||

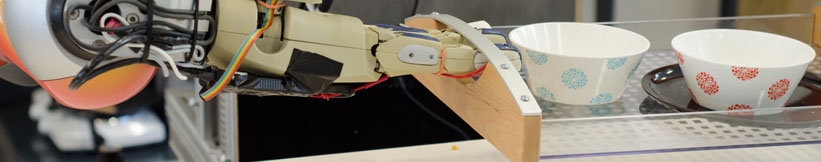

| - | ==== Topic 1: Multi-modal Cluttered Scene Analysis in Knowledge Intensive Scenarios ==== | + | {{ |

| - | {{ | + | **Main Objective:** RoboSherlock is based on the unstructured information management paradigm and uses the UIMA library at its core. The C++ implementation of this library is limited in multiple ways. In this topic you will develop a module to flexibly manage perception pipelines, extending the current implementation to support new modalities and to run pipelines in parallel. This involves implementing an API for pipeline and data handling that is rooted in the UIMA domain. |

| - | **Main Objective:** In this topic we will develop algorithms that | + | **Task Difficulty:** The task is considered |

| - | enable robots in a human environment | + | |

| - | cult and challenging scenarios. To achieve this the participant will | + | **Requirements: |

| - | develop annotators for RoboSherlock that are particularly aimed at | + | |

| - | object-hypotheses generation | + | |

| - | essentially means to generate regions/ | + | |

| - | form a single object or object-part. In particular this entails the | + | |

| - | development | + | |

| - | or object properties, as the likes of transparent objects, or cluttered, | + | |

| - | occluded scenes. The addressed scenarios include stacked, occluded | + | |

| - | objects placed on shelves, objects in drawers, refrigerators, | + | |

| - | ers, cupboards etc. In typical scenarios, these confined spaces also | + | |

| - | bear an underlying structure, which will be exploited and used as | + | |

| - | background knowledge to aid perception (e.g. stacked plates would | + | |

| - | show up as parallel lines under edge detection). Specifically, we | + | |

| - | would start from (but not necessarily limit ourselves to) the | + | |

| - | implementation of two state-of-the-art algorithms described in recent papers: | + | |

| - | [1] Aleksandrs Ecins, Cornelia Fermuller | + | **Expected Results:** an extension to RoboSherlock that allows splitting |

| - | [2] Richtsfeld A., | + | |

| - | Mörwald T., Prankl J., Zillich M. and Vincze | + | |

| - | M. - Segmentation | + | |

| - | IEEE/RSJ International Conference on Intelligent Robots and | + | |

| - | Systems (IROS), 2012. | + | |

| - | **Task Difficulty: | + | **Assignment: |

| + | |||

| + | ---- | ||

| + | |||

| + | e-mail: [[team/ | ||

| + | |||

| + | chat: | ||

| + | |||

| + | ==== Topic 3: Unreal - ROS 2 Integration ==== | ||

| + | |||

| + | {{ : | ||

| + | |||

| + | Since [[https:// | ||

| + | |||

| + | **Task Difficulty: | ||

| | | ||

| - | **Requirements: | + | **Requirements: |

| - | **Expected Results:** Currently the RoboSherlock framework lacks good perception algorithms that can generate object hypotheses in challenging scenarios (clutter | + | **Expected Results:** |

| - | Contact: [[team/ferenc_balint-benczedi|Ferenc Bálint-Benczédi]] | + | Contact: [[team/andrei_haidu|Andrei Haidu]] |

| + | Chat: [[https:// | ||

| + | |||

| + | |||

| + | ==== Topic 4: Unreal Editor User Interface Development ==== | ||

| + | |||

| + | {{ : | ||

| + | |||

| + | For this topic we would like to extend the modules from RobCoG with intuitive Unreal Engine Editor Panels. This would allow easier and faster manipulation/ | ||

| + | |||

| + | **Task Difficulty: | ||

| + | |||

| + | **Requirements: | ||

| + | |||

| + | **Expected Results:** We expect intuitive Unreal Engine UI panels for editing and visualizing the data and features of the various RobCoG plugins. | ||

| + | |||

| + | Contact: [[team/ | ||

| + | |||

| + | |||

| + | ==== Topic 5: Unreal | ||

| + | |||

| + | {{ : | ||

| + | |||

| + | For this topic we would like to create a live connection between openEASE and RobCoG. A user should be able to connect to openEASE from the Unreal Engine Editor and perform various queries, for example to verify whether the items in the Unreal Engine world are present in the robot's ontology. The user should also be able to upload new data directly from the editor. | ||

| + | |||

| + | **Task Difficulty: | ||

| + | |||

| + | **Requirements: | ||

| + | |||

| + | **Expected Results:** We expect a live connection between openEASE and the Unreal Engine editor. | ||

| + | |||

| + | Contact: [[team/ | ||

| + | |||

| + | |||

| + | ==== Topic 6: CRAM -- Visualizing Robot' | ||

| + | |||

| + | {{ : | ||

| + | |||

| + | **Main Objective: | ||

| + | |||

| + | **Task Difficulty: | ||

| + | |||

| + | |||

| + | {{ : | ||

| + | |||

| + | **Requirements: | ||

| + | * Familiarity with functional programming paradigms: some functional programming experience is a requirement (the preferred language is Lisp, but Haskell, Scheme, OCaml, Clojure, Scala or similar will do); | ||

| + | * Experience with ROS (Robot Operating System). | ||

| + | |||

| + | **Expected Results:** We expect operational and robust contributions to the source code of the existing robot control system, including documentation. | ||

| + | |||

| + | Contact: [[team/ | ||

| + | |||

| + | ==== Topic 7: Robot simulation in Unreal Engine with PhysX ==== | ||

| + | |||

| + | {{ : | ||

| + | |||

| + | **Main Objective: | ||

| + | |||

| + | **Task Difficulty: | ||

| + | level, as it requires programming skills in various frameworks (Unreal Engine, | ||

| + | PhysX) as well as expertise in robotic simulation and physics engines. | ||

| + | |||

| + | **Requirements: | ||

| + | of the Unreal Engine and PhysX API. Experience in robotics and robotic simulation is a plus. | ||

| + | **Expected Results:** We expect to be able to simulate robots in Unreal Engine, with support for controlling standard joints. | ||

| + | Contact: [[team/ | ||

Prof. Dr. h.c. Michael Beetz PhD

Head of Institute

Contact via

Andrea Cowley

assistant to Prof. Beetz

ai-office@cs.uni-bremen.de
