Differences

This shows you the differences between two versions of the page.


| teaching:gsoc2018 [2018/01/17 18:44] – nyga | teaching:gsoc2018 [2018/01/22 09:27] – [RoboSherlock -- Framework for Cognitive Perception] ahaidu | ||

|---|---|---|---|

| Line 30: | Line 30: | ||

| package in the Python package index ([[https:// | package in the Python package index ([[https:// | ||

| + | |||

| + | ===== RoboSherlock -- Framework for Cognitive Perception ===== | ||

| + | |||

| + | RoboSherlock is a common framework for cognitive perception, based on the principle of unstructured information management (UIM). UIM has proven itself to be a powerful paradigm for scaling intelligent information and question answering systems towards real-world complexity (e.g. the Watson system from IBM). Complexity in UIM is handled by identifying (or hypothesizing) pieces of | ||

| + | structured information in unstructured documents, by applying ensembles of experts for annotating information pieces, and by testing and integrating these isolated annotations into a comprehensive interpretation of the document. | ||

| + | |||

| + | RoboSherlock builds on top of the ROS ecosystem and is able to wrap almost any existing perception algorithm/ | ||

| + | |||

| + | ===== openEASE -- Web-based Robot Knowledge Service ===== | ||

| + | |||

| + | openEASE is a generic knowledge database for collecting and analyzing experiment data. Its foundation is the KnowRob knowledge processing system and ROS, enhanced by reasoning mechanisms and a web interface developed for inspecting comprehensive experiment logs. These logs can be recorded, for example, from complex CRAM plan executions, virtual reality experiments, | ||

| + | |||

| + | The openEASE web interface as well as further information and publication material can be accessed through its publicly available [[http:// | ||

| ===== Proposed Topics ===== | ===== Proposed Topics ===== | ||

| Line 65: | Line 78: | ||

| **Contact: | **Contact: | ||

| + | |||

| + | |||

| + | ==== Topic 2: Flexible perception pipeline manipulation for RoboSherlock ==== | ||

| + | |||

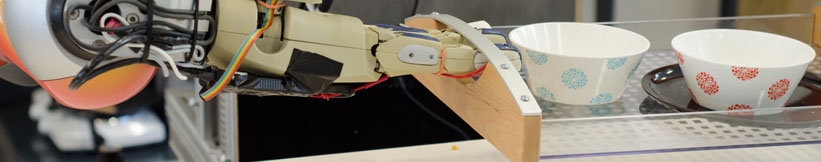

| + | {{ : | ||

| + | |||

| + | **Main Objective: | ||

| + | |||

| + | **Task Difficulty: | ||

| + | | ||

| + | **Requirements: | ||

| + | |||

| + | **Expected Results:** an extension to RoboSherlock that allows splitting and joining pipelines, executing them in parallel, merging results from multiple types of cameras, etc. | ||

| + | |||

| + | Contact: [[team/ | ||

| + | |||
