Differences

This shows you the differences between two versions of the page.


| teaching:gsoc2017 [2017/02/08 08:11] – [Topic 1: Multi-modal Cluttered Scene Analysis in Knowledge Intensive Scenarios] lisca | teaching:gsoc2017 [2017/02/08 08:49] – [Topic 3: ROS with PR2 integration in Unreal Engine] lisca | ||

|---|---|---|---|

| Line 78: | Line 78: | ||

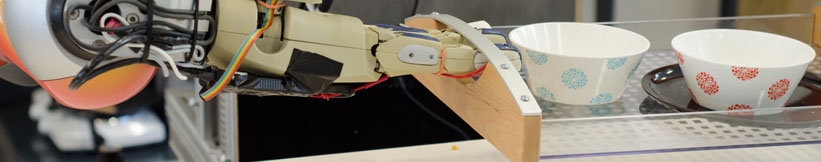

| {{ : | {{ : | ||

| - | **Main Objective: | + | **Main Objective: |

| - | edge detection). | + | able robots in a human environment to recognize objects in |

| + | difficult and challenging scenarios. To achieve this, the participant will | ||

| + | develop | ||

| + | object-hypotheses generation and merging. Generating a hypothesis | ||

| + | essentially means generating regions/ | ||

| + | form a single object or object-part. In particular, this entails the | ||

| + | development of segmentation algorithms for visually challenging scenes | ||

| + | or object properties, such as transparent | ||

| + | occluded scenes. The addressed | ||

| + | objects placed on shelves, objects in drawers, refrigerators, | ||

| + | ers, cupboards etc. In typical scenarios, these confined spaces also | ||

| + | bear an underlying structure, which will be exploited and used as | ||

| + | background knowledge to aid perception (e.g. stacked plates would | ||

| + | show up as parallel lines under edge detection). Specifically, we | ||

| + | would start from (but not necessarily limit ourselves to) the | ||

| + | implementation of two state-of-the-art algorithms described in recent papers: | ||

| + | [1] I. Lysenkov, V. Eruhimov, and G. Bradski, "Recognition and Pose Estimation of Rigid Transparent Objects with a Kinect Sensor," Robotics: Science and Systems Conference (RSS), 2013. | ||

| + | [2] A. Richtsfeld, T. Mörwald, J. Prankl, M. Zillich, and M. Vincze, "Segmentation of Unknown Objects in Indoor Environments," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2012. | ||
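The structural cue described in the objective (stacked plates showing up as parallel lines after edge detection) can be illustrated with a small sketch. It assumes line segments have already been extracted by some edge or line detector, which this page does not specify. The helper `group_parallel_segments`, the 5° angle tolerance, and the minimum group size of 3 are hypothetical choices for illustration only, not part of RoboSherlock or of the cited papers.

```python
import math
from typing import List, Tuple

# A line segment in image pixels: (x1, y1, x2, y2).
Segment = Tuple[float, float, float, float]


def orientation_deg(seg: Segment) -> float:
    """Undirected orientation of a segment, in degrees within [0, 180)."""
    x1, y1, x2, y2 = seg
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def angle_diff(a: float, b: float) -> float:
    """Smallest difference between two undirected orientations (0..90)."""
    d = abs(a - b) % 180.0
    return min(d, 180.0 - d)


def group_parallel_segments(segments: List[Segment],
                            tol_deg: float = 5.0,
                            min_group: int = 3) -> List[List[Segment]]:
    """Greedily group segments whose orientation lies within tol_deg of a
    group's first member; keep only groups with at least min_group members,
    which are candidate 'stack' structures (e.g. plate rims)."""
    groups: List[Tuple[float, List[Segment]]] = []
    for seg in segments:
        theta = orientation_deg(seg)
        for ref, members in groups:
            if angle_diff(theta, ref) <= tol_deg:
                members.append(seg)
                break
        else:
            # No existing group is close enough: start a new one.
            groups.append((theta, [seg]))
    return [members for _, members in groups if len(members) >= min_group]


if __name__ == "__main__":
    # Three near-horizontal edges (plate rims) plus one vertical edge.
    segs = [(0, 10, 100, 11), (0, 20, 100, 20), (0, 30, 100, 29), (50, 0, 50, 40)]
    stacks = group_parallel_segments(segs)
    print(len(stacks), len(stacks[0]))  # 1 3
```

The greedy grouping is deliberately simple; a real component would also check spacing and overlap between the parallel segments before treating them as a stack.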

| **Task Difficulty: | **Task Difficulty: | ||

| | | ||

| - | **Requirements: | + | **Requirements: |

| + | edge of CMake. Experience with PCL and OpenCV is preferred. | ||

| **Expected Results:** Currently the RoboSherlock framework lacks good perception algorithms that can generate object-hypotheses in challenging scenarios (clutter and/or occlusion). The expected results are several software components, based on recent advances in cluttered scene analysis, that can successfully recognize objects in the scenarios mentioned in the objectives, or in a subset of these. | **Expected Results:** Currently the RoboSherlock framework lacks good perception algorithms that can generate object-hypotheses in challenging scenarios (clutter and/or occlusion). The expected results are several software components, based on recent advances in cluttered scene analysis, that can successfully recognize objects in the scenarios mentioned in the objectives, or in a subset of these. | ||
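To make "generating object-hypotheses" concrete, the sketch below segments a small depth image into connected regions whose neighbouring depth values differ by no more than a threshold; each resulting region is one candidate object or object part. This is a generic depth-based region-growing sketch under stated assumptions (a dense depth grid in metres, 4-connectivity, a hypothetical `max_step` of 2 cm, and depth values ≤ 0 treated as invalid). It is not the RoboSherlock API and not the method of either cited paper.

```python
from collections import deque
from typing import List


def grow_regions(depth: List[List[float]], max_step: float = 0.02) -> List[List[int]]:
    """Label each pixel with a region id. 4-connected neighbours join the
    same region when their depth differs by at most max_step metres.
    Pixels with depth <= 0 are treated as invalid and keep the label -1."""
    rows, cols = len(depth), len(depth[0])
    labels = [[-1] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != -1 or depth[r][c] <= 0:
                continue
            # Breadth-first flood fill from an unlabelled, valid seed pixel.
            labels[r][c] = next_id
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and labels[ny][nx] == -1 and depth[ny][nx] > 0
                            and abs(depth[ny][nx] - depth[y][x]) <= max_step):
                        labels[ny][nx] = next_id
                        queue.append((ny, nx))
            next_id += 1
    return labels


if __name__ == "__main__":
    # Left block ~1.0 m away, right block ~0.8 m away, one invalid pixel.
    depth = [
        [1.00, 1.00, 0.80, 0.80],
        [1.00, 1.01, 0.80, 0.81],
        [0.00, 1.01, 0.81, 0.81],
    ]
    print(grow_regions(depth))  # [[0, 0, 1, 1], [0, 0, 1, 1], [-1, 0, 1, 1]]
```

A plain depth threshold like this is exactly what fails on transparent or heavily occluded objects, which is why the project targets more advanced hypothesis generation and merging.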

| Line 106: | Line 130: | ||

| | | ||

| **Requirements: | **Requirements: | ||

| + | |||

| + | **Expected Results** We expect to be able to load URDF models of | ||

| + | various robots (e.g. PR2) and to control them through ROS | ||

| + | in the game engine, in a similar fashion to a robotic simulator. | ||
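Loading a URDF model into a game engine starts with reading the robot's kinematic tree: its links and the joints that connect them. The sketch below uses only Python's standard `xml.etree.ElementTree` to list each joint with its type and parent/child links. The two-link `toy_arm` robot is a made-up example, and `list_joints` is a hypothetical helper. An actual Unreal Engine integration would perform this step in the engine's own importer, which this sketch does not attempt to reproduce.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Hypothetical minimal URDF: two links connected by one revolute joint.
EXAMPLE_URDF = """<robot name="toy_arm">
  <link name="base_link"/>
  <link name="upper_arm"/>
  <joint name="shoulder_joint" type="revolute">
    <parent link="base_link"/>
    <child link="upper_arm"/>
    <axis xyz="0 0 1"/>
    <limit lower="-1.57" upper="1.57" effort="10" velocity="1"/>
  </joint>
</robot>"""


def list_joints(urdf_xml: str) -> List[Tuple[str, str, str, str]]:
    """Return (joint name, joint type, parent link, child link) for every
    <joint> element directly under the <robot> root."""
    root = ET.fromstring(urdf_xml)
    joints = []
    for joint in root.findall("joint"):
        parent = joint.find("parent").get("link")
        child = joint.find("child").get("link")
        joints.append((joint.get("name"), joint.get("type"), parent, child))
    return joints


if __name__ == "__main__":
    print(list_joints(EXAMPLE_URDF))
    # [('shoulder_joint', 'revolute', 'base_link', 'upper_arm')]
```

From this joint list, an engine-side importer could spawn one physics body per link and one constraint per joint, which ROS messages would then drive.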

| + | |||

| + | Contact: [[team/ | ||

| + | |||

| + | ==== Topic 3: ROS with PR2 integration in Unreal Engine ==== | ||

| + | |||

| + | {{ : | ||

| + | |||

| + | **Main Objective: | ||

| + | PR2 robot in [[https:// | ||

| + | |||

| + | **Task Difficulty: | ||

| + | level, as it requires programming skills in various frameworks (ROS, | ||

| + | Linux, Unreal Engine). | ||

| + | | ||

| + | **Requirements: | ||

| + | of the Unreal Engine API, ROS and Linux. Some experience in | ||

| + | robotics. | ||

| **Expected Results** We expect to enhance our currently developed robot learning game with realistic human-like grasping capabilities. These would allow users to interact more realistically with the given virtual environment. The ability to manipulate objects of various shapes and sizes will let us expand the repertoire of tasks executed in the game. Being able to switch between specific grasps will allow us to learn grasping models specific to each manipulated object. | **Expected Results** We expect to enhance our currently developed robot learning game with realistic human-like grasping capabilities. These would allow users to interact more realistically with the given virtual environment. The ability to manipulate objects of various shapes and sizes will let us expand the repertoire of tasks executed in the game. Being able to switch between specific grasps will allow us to learn grasping models specific to each manipulated object. | ||

| Line 111: | Line 156: | ||

| Contact: [[team/ | Contact: [[team/ | ||

| + | ==== Topic 4: Plan Library for Autonomous Robots performing Chemical Experiments ==== | ||

| + | |||

| + | {{ : | ||

| + | |||

| + | **Main Objective: | ||

| + | |||

| + | The successful candidate will use the domain-specific language of [[http:// | ||

| + | of different containers, screwing and unscrewing the cap of a test tube, pouring a substance from a container into another container, operating a centrifuge, etc. | ||

| + | |||

| + | In the first phase of the project, the successful candidate will become familiar with the domain-specific language of the CRAM toolbox and the parameters of the plan-based control programs. This phase will culminate in the student having coded a simple, complete, and fully runnable plan-based control program. | ||

| + | |||

| + | In the second phase of the project, we will decide together with the successful candidate which set of manipulations to implement so that the robot can perform a simple and complete chemical experiment. | ||

| + | |||

| + | In the last phase of the project, the plan-based control programs developed in the second phase will be put together and the complete chemical experiment will be tested and fixed until it runs successfully. | ||
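The phases above amount to writing small plans for individual manipulations and then composing them into one experiment that is run and fixed end to end. The project itself targets the CRAM toolbox, which is Lisp-based. The Python sketch below only illustrates the composition idea in a language-neutral way. The plan names, the `PLAN_LIBRARY` registry, the `plan` decorator, and the `run_experiment` helper are all hypothetical and do not reflect CRAM's actual API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical plan type: takes a parameter dict, returns True on success.
Plan = Callable[[dict], bool]
PLAN_LIBRARY: Dict[str, Plan] = {}


def plan(name: str):
    """Decorator that registers a plan in the library under a name."""
    def register(fn: Plan) -> Plan:
        PLAN_LIBRARY[name] = fn
        return fn
    return register


@plan("unscrew-cap")
def unscrew_cap(params: dict) -> bool:
    # Placeholder check; a real plan would command the robot and
    # verify the outcome through perception.
    return params.get("container") is not None


@plan("pour")
def pour(params: dict) -> bool:
    # Placeholder check: pouring into the same container is rejected.
    return params.get("source") != params.get("target")


def run_experiment(steps: List[Tuple[str, dict]]) -> Tuple[bool, int]:
    """Run the steps in order and stop at the first failing plan.
    Returns (success, index of the failing step, or len(steps) on success)."""
    for i, (name, params) in enumerate(steps):
        if not PLAN_LIBRARY[name](params):
            return False, i
    return True, len(steps)


if __name__ == "__main__":
    experiment = [
        ("unscrew-cap", {"container": "test-tube-1"}),
        ("pour", {"source": "test-tube-1", "target": "beaker-1"}),
    ]
    print(run_experiment(experiment))  # (True, 2)
```

Reporting the index of the failing step mirrors the last phase's "test and fix until it runs" loop: the failing plan is the one to debug next.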

| + | |||

| + | The set of plan-based control programs resulting from the project will form the execution basis for future experiments at IAI, which will investigate how an autonomous robot can reproduce a chemical experiment represented with semantic web tools. | ||

| + | |||

| + | **Requirements: | ||

| + | |||

| + | **Expected Results** We expect to successfully code a library of plan-based control programs that will enable an autonomous robot to manipulate typical chemical laboratory equipment and perform a small class of chemical experiments in the Gazebo simulator. | ||

| + | Contact: [[team/ | ||

Prof. Dr. h.c. Michael Beetz PhD

Head of Institute

Contact via

Andrea Cowley

assistant to Prof. Beetz

ai-office@cs.uni-bremen.de
