
SUTURO @RoboCup German Open 2020

Motivation and Goal

RoboCup@Home provides a stage for service robots and the problems they can tackle, such as interacting with humans and helping them in their everyday lives. In the competition, the participants have to perform different tests. The first test we try to accomplish is "Storing Groceries". In this setup, the robot has to recognize objects placed on a table and then sort them into a shelf. The shelf already contains some objects, and the robot must categorize them so that it can correctly place the objects from the table. Furthermore, one door of the shelf will be closed, so the robot has to either open it on its own or ask for help. To accomplish the test, the robot has to be able to:

- Navigate between the table and shelf,

- Recognize the objects,

- Find categories for the objects/sort the objects,

- Grasp the objects,

- Put the objects in the shelf,

- Open the door or ask for help

Team

This project's development process is split into the following groups:

Planning

Teamleader: Jan Schmipf

Manipulation

Teamleader: Marc Stelter

Perception

Teamleader: Evan Kapitzke

Navigation

Teamleader: Marc Stelter

Knowledge

Teamleader: Paul Schnipper

We are happy to present our whole team:

Merete Brommarius

merbom[at]uni-bremen.de

Group: Knowledge (NLP&NLG)

Course of studies: Linguistics B.A.

Evan Kapitzke

evan[at]uni-bremen.de

Group: Perception

Course of studies: Informatics B.Sc.

Philipp Klein

phklein[at]uni-bremen.de

Group: Planning

Course of studies: Informatics B.Sc.

Tom-Eric Lehmkuhl

tomlehmk[at]uni-bremen.de

Group: Planning

Course of studies: Informatics B.Sc.

Jan Neumann

jan_neu[at]uni-bremen.de

Group: Manipulation

Course of studies: Informatics B.Sc.

Torge Olliges

tolliges[at]uni-bremen.de

Group: Planning

Course of studies: Informatics B.Sc.

Leonids Panagio de Oliveira Neto

leo_pan[at]uni-bremen.de

Group: Perception

Course of studies: Informatics B.Sc.

Fabian Rosenstock

fabrosen[at]uni-bremen.de

Group: Knowledge

Course of studies: Informatics B.Sc.

Jan Schmipf

schimpf[at]uni-bremen.de

Group: Planning

Course of studies: Informatics B.Sc.

Paul Schnipper

schnippa[at]uni-bremen.de

Group: Knowledge (NLP)

Course of studies: Informatics B.Sc.

Marc Stelter

stelter2[at]uni-bremen.de

Group: Navigation/Manipulation

Course of studies: Informatics B.Sc.

Jan-Frederick Stock

jastock[at]uni-bremen.de

Group: Perception

Course of studies: Informatics B.Sc.

Jeremias Thun

thun[at]uni-bremen.de

Group: Perception

Course of studies: Informatics B.Sc.

Fabian Weihe

weihe[at]uni-bremen.de

Group: Manipulation

Course of studies: Informatics B.Sc.

Methodology and implementation

Knowledge:

To fulfill complex tasks, a robot needs knowledge and memory of its environment. While the robot acts in its world, it recognizes objects and manipulates them through pick-and-place tasks. Using KnowRob, a belief state provides an episodic memory of the robot's experience by recording each cognitive activity. Ontologies allow objects to be classified and put into context, which enables logical reasoning about the environment and intelligent decision making.

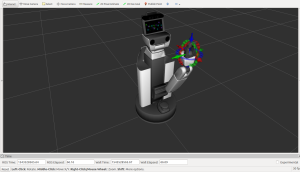
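The idea of classifying objects through an ontology and reasoning about where they belong can be illustrated with a small sketch. This is not KnowRob code: the class hierarchy, the shelf level names and the function names below are hypothetical and only serve to show the principle.

```python
# Hypothetical toy ontology: each class maps to its direct superclass.
SUBCLASS_OF = {
    "Apple": "Fruit",
    "Banana": "Fruit",
    "Fruit": "Food",
    "Cereal": "Food",
    "Sponge": "CleaningSupply",
}


def ancestors(cls):
    """Return cls followed by all of its superclasses, most specific first."""
    chain = [cls]
    while chain[-1] in SUBCLASS_OF:
        chain.append(SUBCLASS_OF[chain[-1]])
    return chain


def choose_shelf_level(new_object_class, shelf_contents):
    """Pick the shelf level holding the most closely related object.

    shelf_contents maps a level name to the classes of the objects on it.
    Returns None if no level shares any class with the new object.
    """
    # Try the most specific class first, then increasingly general ones.
    for candidate in ancestors(new_object_class):
        for level, classes in shelf_contents.items():
            if any(candidate in ancestors(c) for c in classes):
                return level
    return None


shelf = {"top": ["Sponge"], "middle": ["Cereal"], "bottom": ["Banana"]}
apple_level = choose_shelf_level("Apple", shelf)
```

Here the apple ends up next to the banana, because both are fruit, rather than next to the cereal, which only shares the more general class of food.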

Navigation:

In order to perform the task, the robot needs to be able to navigate autonomously and safely within the world. This includes generating a representation of its surroundings as a 2D map, so that a path can be calculated to navigate the robot from point A to point B. Obstacles that are not part of the map, e.g. movable objects like chairs or people who cross the robot's path, also have to be detected and avoided. The combination of all these aspects allows the robot to navigate safely within the environment.

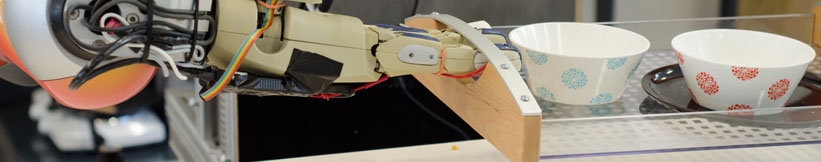
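As a minimal sketch of path calculation on a 2D map, the following example runs a breadth-first search on a small occupancy grid. It is an illustration only and not the planner running on the robot; the grid, the cell encoding (0 for free, 1 for occupied) and the function name are assumptions made for this example.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 2D occupancy grid.

    grid[row][col] is 0 for free space and 1 for an obstacle.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
path = plan_path(grid, (0, 0), (2, 0))

# An unmapped obstacle (e.g. a chair) appears in the only corridor.
grid[1][2] = 1
blocked = plan_path(grid, (0, 0), (2, 0))
```

Marking a newly detected obstacle in the grid and planning again is the simplest way to react to objects that were not in the original map; in the second call no path remains, so the robot would have to wait or ask for help.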

Manipulation:

Manipulation is the control of the movements of the robot's joints and links. This allows the robot to move correctly and to carry out orders coming from the upper layer. Depending on the values received, the robot can, for example, grasp objects or deposit them.

Giskardpy is an important library for the calculation of movements. Manipulation is implemented in Python on top of ROS, using the iai_kinematic_sim and iai_hsr_sim libraries in addition to Giskardpy.

Planning:

Planning connects perception, knowledge, manipulation and navigation by creating plans for the robot's activities. Here, we develop generic strategies so that the robot can decide which action should be executed in which situation and in which order. Planning is also responsible for failure handling and for providing recovery strategies. To write the plans, we use the programming language Lisp.
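Failure handling with recovery strategies can be sketched as follows. Our plans are written in Lisp; this Python version only illustrates the control flow, and all names in it (`ActionFailed`, `perform_with_recovery`, `place_object`, `ask_for_help`) are hypothetical stand-ins for robot actions.

```python
class ActionFailed(Exception):
    """Raised by an action that could not be completed."""


def perform_with_recovery(action, recoveries, max_attempts=3):
    """Run action; on failure, run the next recovery strategy and retry.

    action and each recovery are zero-argument callables. Returns the
    action's result, or re-raises the last failure once all attempts
    are used up.
    """
    for attempt in range(max_attempts):
        try:
            return action()
        except ActionFailed:
            if attempt == max_attempts - 1:
                raise
            # Apply one recovery per failed attempt, if one is left.
            if attempt < len(recoveries):
                recoveries[attempt]()


# Toy world state: the shelf door starts closed.
state = {"door_open": False}


def place_object():
    if not state["door_open"]:
        raise ActionFailed("shelf door is closed")
    return "placed"


def ask_for_help():
    # Stand-in for asking a human to open the door.
    state["door_open"] = True


result = perform_with_recovery(place_object, [ask_for_help])
```

The first attempt fails because the door is closed, the recovery asks for help, and the second attempt succeeds. If every attempt fails, the failure is passed on so that a higher-level plan can decide what to do next.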

Perception:

The perception module processes the visual data received from the robot's camera sensors. The cameras send point clouds of the different objects in the robot's field of view. Using frameworks such as RoboSherlock and OpenCV, perception calculates the geometric features of the scene. Based on this, it determines which point clouds could be objects of interest. It recognizes the shapes, colors and other features of objects and publishes this information to the other modules.
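One simple geometric feature is the size of a point cluster. The sketch below computes an axis-aligned bounding box for a cluster of 3D points and uses it to decide whether the cluster could be an object of interest. It does not use RoboSherlock or OpenCV; the function names and the size thresholds (in metres) are assumptions chosen for illustration.

```python
def bounding_box_size(points):
    """Return the (x, y, z) extent of an axis-aligned box around 3D points."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


def is_object_of_interest(points, min_size=0.02, max_size=0.40):
    """Keep clusters whose largest extent lies in a graspable range.

    The thresholds are illustrative assumptions, not tuned values.
    """
    largest = max(bounding_box_size(points))
    return min_size <= largest <= max_size


# A cluster roughly the size of a small box, and one the size of a table top.
small_box = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, 0.0, 0.2)]
table_top = [(0.0, 0.0, 0.7), (1.2, 0.0, 0.7), (0.0, 0.8, 0.7), (1.2, 0.8, 0.7)]

candidates = [c for c in (small_box, table_top) if is_object_of_interest(c)]
```

Only the small cluster passes the filter; the table-sized cluster is too large to be a grocery item and is discarded.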

Link to open source and research

Prof. Dr. hc. Michael Beetz PhD

Head of Institute

Contact via

Andrea Cowley

assistant to Prof. Beetz

ai-office@cs.uni-bremen.de
