Differences

This shows you the differences between two versions of the page.


| jobs [2014/02/18 21:22] – tenorth | jobs [2015/03/19 12:25] – [Theses and Jobs] balintbe | ||

|---|---|---|---|

| Line 3: | Line 3: | ||

| If you are looking for a bachelor/ | If you are looking for a bachelor/ | ||

| - | == Web-based knowledge visualizations (BA/HiWi) == | ||

| - | |||

| - | {{ : | ||

| - | |||

| - | The knowledge base visualization can display semantic environment maps, | ||

| - | objects, human poses, trajectories and similar information stored in the | ||

| - | robot' | ||

| - | is written in Java and has grown old over the years. Recently, there has been | ||

| - | much progress in creating [[http:// | ||

| - | 3D visualizations]] for ROS that can also be accessed with a | ||

| - | [[http:// | ||

| - | a web-based version of the visualization using these techniques. | ||

| - | |||

| - | Requirements: | ||

| - | * Good programming skills | ||

| - | * Experience with JavaScript development | ||

| - | * Knowledge of Web technologies (HTML, XML, OWL) | ||

| - | |||

| - | Contact: [[team: | ||

| - | |||

| - | |||

| - | == Tools for knowledge acquisition from the Web (BA/ | ||

| - | |||

| - | There are several options for doing a project related to the acquisition of | ||

| - | knowledge from Web sources like online shops, repositories of object models, | ||

| - | recipe databases, etc. | ||

| - | |||

| - | Requirements: | ||

| - | * Programming skills (Java) | ||

| - | * Experience with Web languages and data mining techniques is helpful | ||

| - | * Depending on the focus of the project, experience with database technology, natural-language processing or computer vision may be helpful | ||

| - | |||

| - | Contact: [[team: | ||

| Line 89: | Line 56: | ||

| [2] http:// | [2] http:// | ||

| - | == HiWi position: Segmentation and interpretation of 3D object models (HiWi/MA) == | ||

| - | {{ :research:cup2-segmented.png?170}} | + | == Kitchen Activity Games in a Realistic Robotic Simulator (BA/ |

| + | {{ :research:gz_env1.png?200|}} | ||

| - | Competent object interaction requires knowledge about the structure | + | Developing new activities |

| - | composition of objects. In an ongoing research project, we are investigating | + | |

| - | how part-based object models can automatically be extracted from CAD models | + | |

| - | found on the Web, e.g. on the [[http://sketchup.google.com/3dwarehouse|3D warehouse]]. | + | |

| - | We are looking for a student research assistant to push this topic forward. | + | Requirements: |

| - | In close collaboration with the researchers of the Institute for Artificial | + | * Good programming skills in C/C++ |

| - | Intelligence, | + | * Basic physics/ |

| - | segmentation, | + | * Gazebo simulator basic tutorials |

| - | methods into the robot' | + | |

| - | the segmentation results. Depending on the results, cooperation on joint | + | |

| - | publications may be possible. | + | |

| - | This HiWi position can serve as a starting point for future Bachelor' | + | Contact: [[team: |

| - | Master' | + | |

| - | project that could be worked on as a Master' | + | == Integrating Eye Tracking in the Kitchen Activity Games (BA/MA)== |

| + | {{ :research: | ||

| + | |||

| + | Integrating the eye tracker in the [[http:// | ||

| Requirements: | Requirements: | ||

| - | * Good Java programming skills | + | * Good programming skills |

| - | * Basic knowledge | + | * Gazebo simulator basic tutorials |

| - | * Experience with working with 3D models | + | |

| + | Contact: [[team: | ||

| + | |||

| + | == Hand Skeleton Tracking Using Two Leap Motion Devices (BA/MA)== | ||

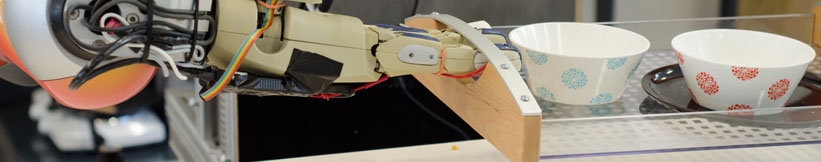

| + | {{ : | ||

| + | |||

| + | Improving the skeletal tracking offered by the [[https:// | ||

| + | |||

| + | The tracked hand can then be used as input for the Kitchen Activity Games framework. | ||

| + | |||

| + | Requirements: | ||

| + | * Good programming skills in C/C++ | ||

| + | |||

| + | Contact: [[team: | ||
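The posting does not say how the two devices would be combined. One possible approach is to express both skeletons in a shared coordinate frame and blend them. The sketch below shows this for a single joint. It assumes two things the posting does not state: the rigid transform (R, t) from device B's frame to device A's frame is already known (for example from a prior extrinsic calibration), and each device reports a per-joint confidence in [0, 1]. The function names are hypothetical and are not part of the Leap Motion SDK.

```python
# Hypothetical sketch: fuse one hand joint seen by two Leap Motion devices.
# Assumes R, t (device B -> device A) are known and each device reports a
# per-joint confidence in [0, 1]. Not Leap Motion SDK code.

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


def transform(R: Mat3, t: Vec3, p: Vec3) -> Vec3:
    """Map point p from device B's frame into device A's frame: R p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def fuse_joint(p_a: Vec3, conf_a: float, p_b: Vec3, conf_b: float,
               R: Mat3, t: Vec3) -> Vec3:
    """Confidence-weighted average of both estimates, expressed in frame A."""
    p_b_in_a = transform(R, t, p_b)
    total = conf_a + conf_b
    if total == 0.0:
        raise ValueError("both devices report zero confidence")
    return tuple((conf_a * a + conf_b * b) / total for a, b in zip(p_a, p_b_in_a))


if __name__ == "__main__":
    # Device B faces device A: rotated 180 degrees about y, 200 mm away along x.
    R = ((-1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, -1.0))
    t = (200.0, 0.0, 0.0)
    print(fuse_joint((100.0, 150.0, 0.0), 0.75, (96.0, 150.0, 0.0), 0.25, R, t))
    # prints (101.0, 150.0, 0.0)
```

A real tracker would also need to handle joints that only one device can see and to filter estimates over time. Both are left out here.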

| + | |||

| + | == Fluid Simulation in Gazebo (BA/MA)== | ||

| + | {{ : | ||

| + | |||

| + | [[http:// | ||

| + | |||

| + | Currently there is an [[http:// | ||

| + | |||

| + | The computational method for the fluid simulation is SPH (Smoothed-Particle Hydrodynamics); however, newer and better SPH-based methods now exist | ||

| + | and should be implemented (PCISPH/ | ||

| + | |||

| + | The interaction between the fluid and the rigid objects is naive: forces and torques are applied only from particle collisions, without taking pressure and other forces into account. | ||

| + | |||

| + | Another topic would be the visualization of the fluid, which is currently done by rendering every particle. For the rendering engine [[http:// | ||

| + | |||

| + | Here is a [[https:// | ||

| + | |||

| + | Requirements: | ||

| + | * Good programming skills in C/C++ | ||

| + | * Interest in fluid simulation | ||

| + | * Basic physics/ | ||

| + | * Gazebo simulator and Fluidix basic tutorials | ||

| + | |||

| + | Contact: [[team: | ||
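The following minimal sketch illustrates the basic SPH step described in this topic: estimating each particle's density from its neighbours and converting that density to a pressure. It is written in plain Python rather than the simulator's C++. It assumes the 3D poly6 smoothing kernel and a simple linear equation of state p = k (rho - rho_0). These are common textbook choices, but they are not necessarily what the current Fluidix-based plugin uses, and all names here are hypothetical. PCISPH and similar methods build on this step by iteratively correcting the pressures so that densities stay close to rho_0. That correction loop is not shown.

```python
import math


def poly6(r: float, h: float) -> float:
    """3D poly6 kernel: W(r, h) = 315 / (64 pi h^9) * (h^2 - r^2)^3 for 0 <= r <= h, else 0."""
    if r < 0.0 or r > h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h ** 9) * (h * h - r * r) ** 3


def densities(positions, mass: float, h: float) -> list[float]:
    """SPH density estimate rho_i = sum_j m * W(|x_i - x_j|, h), including j == i."""
    rho = []
    for xi in positions:
        total = 0.0
        for xj in positions:  # brute force O(n^2) neighbour search
            total += mass * poly6(math.dist(xi, xj), h)
        rho.append(total)
    return rho


def pressures(rho: list[float], rest_density: float, stiffness: float) -> list[float]:
    """Linear equation of state p_i = k * (rho_i - rho_0)."""
    return [stiffness * (r - rest_density) for r in rho]


if __name__ == "__main__":
    pts = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (3.0, 0.0, 0.0)]
    rho = densities(pts, mass=1.0, h=1.0)
    print(rho, pressures(rho, rest_density=1.0, stiffness=10.0))
```

The double loop is only for readability. A simulator would restrict the sum to neighbours within h, typically by using a uniform grid.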

| + | |||

| + | |||

| + | == Automated sensor calibration toolkit (MA)== | ||

| + | |||

| + | Computer vision | ||

| + | |||

| + | The goal of this Master's thesis is to develop an automated system for calibrating cameras, especially RGB-D cameras such as the Kinect v2. | ||

| + | |||

| + | The system should: | ||

| + | * be independent of the camera type | ||

| + | * estimate intrinsics and extrinsics | ||

| + | * provide depth calibration (for RGB-D cameras) | ||

| + | * integrate capabilities from Halcon [1] | ||

| + | |||

| + | Requirements: | ||

| + | * Good programming skills in Python and C/C++ | ||

| + | * ROS, OpenCV | ||

| - | Contact: | + | [1] http:// |

| + | Contact: [[team: | ||
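The sketch below shows what "intrinsics" and "extrinsics" refer to in the standard pinhole camera model used in the calibration topic above. It also computes the reprojection error that calibration routines typically minimize. For example, OpenCV's `cv2.calibrateCamera` returns this error as an RMS value. The code is a hypothetical illustration only. It leaves out lens distortion and RGB-D depth calibration, and its function names do not come from OpenCV or Halcon.

```python
# Hypothetical illustration of the pinhole model a calibration toolkit estimates.
# Extrinsics (R, t): world frame -> camera frame.
# Intrinsics (fx, fy, cx, cy): camera frame -> pixel coordinates.
# Lens distortion is ignored.


def project(point_world, R, t, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates (u, v)."""
    # World -> camera frame: X_c = R X_w + t
    xc, yc, zc = (sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
                  for i in range(3))
    if zc <= 0.0:
        raise ValueError("point is behind the camera")
    # Perspective division followed by the intrinsic mapping
    u = fx * xc / zc + cx
    v = fy * yc / zc + cy
    return u, v


def reprojection_error(points_world, pixels, R, t, fx, fy, cx, cy):
    """RMS pixel distance between observed pixels and projected world points."""
    sq = 0.0
    for X, (u_obs, v_obs) in zip(points_world, pixels):
        u, v = project(X, R, t, fx, fy, cx, cy)
        sq += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return (sq / len(points_world)) ** 0.5


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    # approximately (370.0, 215.0)
    print(project((0.1, -0.05, 1.0), identity, (0.0, 0.0, 0.0),
                  500.0, 500.0, 320.0, 240.0))
```

A calibration tool searches for the R, t, fx, fy, cx and cy (plus distortion terms) that make this error small over many views of a known target.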

Prof. Dr. hc. Michael Beetz PhD

Head of Institute

Contact via

Andrea Cowley

assistant to Prof. Beetz

ai-office@cs.uni-bremen.de
