
Open Research in Robotics: Introducing openEASE

Pizza-Making Experiment Demonstrates the Potential of Open Research in Robotics

- openEASE is a platform where robots can learn to perform complex tasks faster based on the experiences of other machines

- Users can retrieve memorized episodes and query what the robot saw, reasoned, and did during each episode, and what effects its actions caused

- The platform combines “big data” storage with knowledge processing, cloud-based computation, and web technology

- The first elaborate experiment on the platform includes two robots cooperating to make fresh pizza

When human beings hit a tennis ball or perform a similar action, an episodic memory is created. We remember the movement of the arm and how much force we applied, which helps us avoid blasting the ball over the fence the next time. Robots can benefit from episodic memories just as much as humans: machines learn to perform complicated tasks much faster when they can draw on experience. To facilitate this process, a platform called openEASE was created, where anyone can contribute to a growing database of “robot memories”. The goal is to create an open research environment in which robotics scientists share their work and access data from other researchers, including the option of letting their robots communicate directly with the database. openEASE was presented at the 2015 IEEE International Conference on Robotics and Automation (ICRA), held May 26 to 30 in Seattle, where one paper related to the openEASE project received the Best Service Robotics Paper Award:

- RoboSherlock: Unstructured Information Processing for Robot Perception. Beetz, Michael; Balint-Benczedi, Ferenc; Blodow, Nico; Nyga, Daniel; Wiedemeyer, Thiemo; Marton, Zoltan-Csaba

A second paper was a finalist for the Best Cognitive Robotics Paper Award:

- openEASE – A Knowledge Processing Service for Robots and Robotics/AI Researchers. Beetz, Michael; Tenorth, Moritz; Winkler, Jan

Building knowledge on previous research

The idea behind openEASE is that researchers worldwide are working on many specific problems that must be solved for robots to act autonomously, but to perform complex tasks like preparing a meal, this knowledge has to be shared and the machines’ capabilities have to be combined. “Big data” storage, knowledge processing, cloud computing, and web technology are used to give researchers a database that makes it easy to analyze robot experiences, whether from their own experiments or from those of other scientists. In the future, robotic systems will also be able to access their own and other robots’ episodic memories directly. At the same time, the data from all experiments can be reused in new projects and in teaching.

All information is gathered automatically while a robot performs a task. The collected data includes the positions of individual parts, images from the robot’s perception system, and other sensor and control signal streams. In the database, all information is annotated with semantic indexing structures that are generated automatically by the interpreter of the robot control system. These episodic memories help robotic systems assess what they were doing and enable them to answer queries about what they did, why they did it, how they did it, what they saw while doing it, and what happened when they did it. A web-based graphical query interface dramatically reduces the time and effort required for such analyses.
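To make the idea of semantically annotated episodic memories more concrete, here is a minimal Python sketch built on a deliberately simplified, hypothetical data model; it is not openEASE’s actual data format or query interface. Each logged event is assumed to carry a `kind`, a `name`, a time interval, the object involved, and a `parent` goal. The class names `Event` and `Episode` and the three query methods are illustrative only. The sketch shows how such annotations allow a program to answer “what did the robot do”, “why did it do it”, and “what did it see while doing it”.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Event:
    """One annotated entry in a hypothetical episodic memory."""
    kind: str                     # assumed categories: "action" or "perception"
    name: str                     # e.g. "RollDough" or "ObjectDetected"
    start: float                  # seconds since the episode began
    end: float
    obj: Optional[str] = None     # object involved, if any
    parent: Optional[str] = None  # goal this event serves (answers "why")


@dataclass
class Episode:
    events: List[Event] = field(default_factory=list)

    def _action(self, name: str) -> Optional[Event]:
        # First recorded action with the given name, or None.
        return next((e for e in self.events
                     if e.kind == "action" and e.name == name), None)

    def what_did(self) -> List[str]:
        """What did the robot do? Action names in chronological order."""
        actions = [e for e in self.events if e.kind == "action"]
        return [e.name for e in sorted(actions, key=lambda e: e.start)]

    def why(self, action: str) -> Optional[str]:
        """Why did it do it? The parent goal annotated on the action."""
        event = self._action(action)
        return event.parent if event else None

    def saw_during(self, action: str) -> List[str]:
        """What did it see? Objects perceived in intervals overlapping the action."""
        target = self._action(action)
        if target is None:
            return []
        return [e.obj for e in self.events
                if e.kind == "perception" and e.obj is not None
                and e.start < target.end and e.end > target.start]


if __name__ == "__main__":
    # Made-up example episode; the timings are illustrative, not experiment data.
    ep = Episode([
        Event("action", "FetchTray", 0.0, 12.0, obj="tray", parent="MakePizza"),
        Event("perception", "ObjectDetected", 2.0, 3.0, obj="tray"),
        Event("action", "RollDough", 12.0, 40.0, obj="dough", parent="MakePizza"),
        Event("perception", "ObjectDetected", 15.0, 16.0, obj="dough"),
    ])
    print(ep.what_did())               # ['FetchTray', 'RollDough']
    print(ep.why("RollDough"))         # MakePizza
    print(ep.saw_during("RollDough"))  # ['dough']
```

Answering “what did it see while doing it” by overlapping time intervals is one simple design choice for this sketch; a real system could link perceptions to actions through explicit semantic relations instead.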

Robots can learn from human actions, too

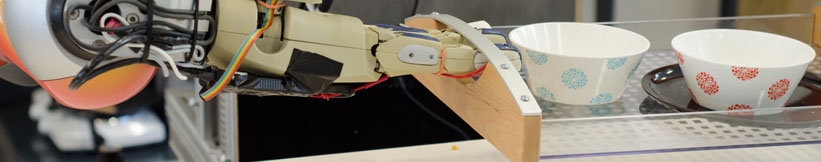

A kitchen scenario was chosen to test the functionality of openEASE and its accompanying software tools because the tasks involved in preparing fresh food are highly complex. The first elaborate experiment on the platform involves two collaborating robots preparing a pizza. Raphael, a PR2 robot with a Microsoft Kinect for Xbox One as its main perception device, fetched objects such as a tray and a bottle of ketchup and carried them wherever they were needed. Boxy, a custom-built robot with two KUKA manipulators, rolled out the dough, spread the tomato sauce, and added the toppings. When rolling out the dough, Boxy drew on the previously recorded actions of a human, especially regarding the right amount of pressure and the movements of the rolling pin. The experiment logs make it possible to infer knowledge such as how the size of the pizza dough changed after each movement and what trajectory the robot arm followed while reaching for the dough. When Boxy was finished, Raphael took over again and placed the tray in the oven. Once the PR2 detected that a human had appeared and started the oven, it informed Boxy that the task was complete.

To open another avenue for robot learning, data from simulations can also be added to openEASE to help identify the most efficient ways of completing specific tasks.

openEASE is open for registration

With all its detailed information, the knowledge base is an open research tool that greatly improves the reproducibility of experimental results, for example by providing various tools for summarizing experiments, among them automated video generation and a variety of infographic-style visualizations. openEASE also supports the analysis of robots’ perceptual capabilities, particularly in combination with RoboSherlock, a perception system equipped with a library of state-of-the-art perception methods.

Researchers who are interested in using openEASE can register on the website to gain access to all tools and data. The team plans to provide an easily accessible interface for integrating new types of experiments and to continually add new analysis modules to the system. This work is partly supported by the EU FP7 projects RoboHow (Grant Agreement Number 288533), ACat (600578), SAPHARI (287513), and Sherpa (600958), and by the German Research Foundation.

Prof. Dr. h.c. Michael Beetz, PhD

Head of Institute

Contact via

Andrea Cowley

assistant to Prof. Beetz

ai-office@cs.uni-bremen.de
