
| robocup19 [2018/11/29 21:49] – s_7teji4 | robocup19 [2019/01/09 08:31] – s_7teji4 |

|---|

| === Motivation and Goal=== | === Motivation and Goal=== |

| <html> | <html> |

| <div> | <div style="font-size:14px"> |

| <h5><b>Our goal</b>: We are participating in this year's <b><a href="https://www.robocupgermanopen.de/en/major/athome">RoboCup@Home German Open</a></b>. <br>RoboCup@Home provides a stage for service robots and the problems they can tackle, such as interacting with humans and helping them in their everyday lives. In the cup, participants have to perform different tests. The first test we aim to accomplish is <i>"Storing Groceries"</i>. In this setup, the robot has to recognize objects placed on a table and then sort them into a shelf. The shelf will already contain some objects, and the robot must categorize them so that it can correctly place the objects from the table. Furthermore, one door of the shelf will be closed, so the robot has to either open it on its own or ask for help. To accomplish the test, the robot has to be able to: | <b>Our goal</b>: We are participating in this year's <b><a href="https://www.robocupgermanopen.de/en/major/athome">RoboCup@Home German Open</a></b>. <br>RoboCup@Home provides a stage for service robots and the problems they can tackle, such as interacting with humans and helping them in their everyday lives. In the cup, participants have to perform different tests. The first test we aim to accomplish is <i>"Storing Groceries"</i>. In this setup, the robot has to recognize objects placed on a table and then sort them into a shelf. The shelf will already contain some objects, and the robot must categorize them so that it can correctly place the objects from the table. Furthermore, one door of the shelf will be closed, so the robot has to either open it on its own or ask for help. To accomplish the test, the robot has to be able to: |

| <ul style="list-style-type:square"> | <ul style="list-style-type:square"> |

| <li>Navigate between the table and shelf,</li> | <li>Navigate between the table and shelf,</li> |

| <li>Open the door or ask for help</li> | <li>Open the door or ask for help</li> |

| </ul> | </ul> |

| </h5> | |

| </div> | </div> |

| </html> | </html> |

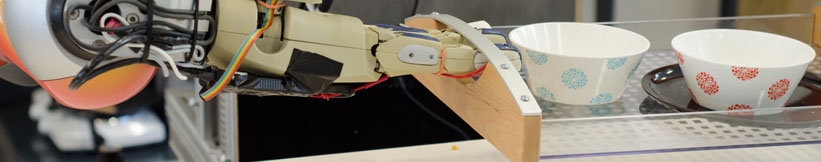

| Manipulation is the control of the movements of the robot's joints and links. This allows the robot to move correctly and carry out commands coming from the upper layer. Depending on the values received, the robot can, for example, grasp objects or put them down. | Manipulation is the control of the movements of the robot's joints and links. This allows the robot to move correctly and carry out commands coming from the upper layer. Depending on the values received, the robot can, for example, grasp objects or put them down. |

| Giskardpy is an important library for calculating movements. Manipulation is implemented in Python on top of ROS, using the iai_kinematic_sim and iai_hsr_sim libraries in addition to Giskardpy. | Giskardpy is an important library for calculating movements. Manipulation is implemented in Python on top of ROS, using the iai_kinematic_sim and iai_hsr_sim libraries in addition to Giskardpy. |

| | </html>{{ :robocupfiles:toya02.png?nolink&300 |}} <html> |

| </p> | </p> |

| <p><b><u>Planning</u>:</b><br> | <p><b><u>Planning</u>:</b><br> |

| </p> | </p> |

| <p><b><u>Perception</u>:</b><br> | <p><b><u>Perception</u>:</b><br> |

| </html>{{ :robocupfiles:objects_perception01.png?nolink&200 |}}<html> | |

| The perception module is responsible for processing the visual data received from the robot's camera sensors. The cameras deliver point clouds that represent the different objects in the robot's field of view. Using frameworks such as RoboSherlock and OpenCV, the perception module calculates the geometric features of the scene. Based on these, it determines which point clouds could be objects of interest. It recognizes the shapes, colors and other features of objects and publishes this information to the other modules. | The perception module is responsible for processing the visual data received from the robot's camera sensors. The cameras deliver point clouds that represent the different objects in the robot's field of view. Using frameworks such as RoboSherlock and OpenCV, the perception module calculates the geometric features of the scene. Based on these, it determines which point clouds could be objects of interest. It recognizes the shapes, colors and other features of objects and publishes this information to the other modules. |

| | </html>{{ :robocupfiles:objects_perception01.png?nolink&200 |}}<html> |

| </p> | </p> |

| </div> | </div> |

| |

| === Link to open source and research === | === Link to open source and research === |

| | <html> |

| | <ul style="list-style-type:disc"> |

| | <li><a href="https://github.com/Suturo1819">SUTURO 18/19</a></li> |

| | <li><a href="https://github.com/SemRoCo/giskardpy">Giskardpy</a></li> |

| | <li><a href="https://github.com/code-iai">Code IAI</a></li> |

| | </ul> |

| | </html> |