Differences

This shows you the differences between two versions of the page.


Old revision: robocup19 [2018/11/29 21:49] – s_7teji4

New revision: robocup19 [2018/11/29 22:13] – s_7teji4

Line 52 (old) / Line 52 (new):

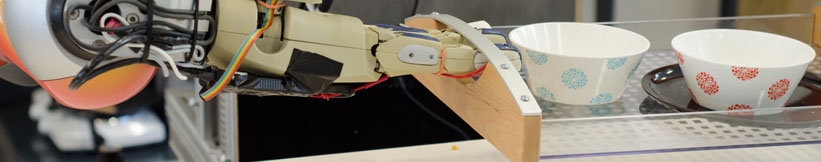

Manipulation is the control of the movements of the robot's joints and links. It lets the robot move correctly and carry out the commands coming from the upper layer. Depending on the values it receives, the robot can, for example, grasp objects or put them down.
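To make the path from an upper-layer command down to joint movements concrete, here is a minimal Python sketch. It assumes commands arrive as an action name plus a target joint configuration; the `Command` format, the action names `grasp`/`place`, the helper functions and the joint names are hypothetical and not taken from the project's code. Real motions would also have to respect joint limits and avoid collisions.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical joint configuration: joint name -> joint position (radians or metres).
JointState = Dict[str, float]


@dataclass
class Command:
    """A command as it might arrive from the upper layer (assumed format)."""
    action: str          # hypothetical action names: "grasp" or "place"
    target: JointState   # desired joint configuration for the action


def interpolate(start: JointState, goal: JointState, steps: int) -> List[JointState]:
    """Linearly interpolate every goal joint from start to goal in `steps` equal increments."""
    if steps < 1:
        raise ValueError("steps must be >= 1")
    trajectory = []
    for i in range(1, steps + 1):
        alpha = i / steps  # fraction of the way to the goal, reaching 1.0 at the last step
        trajectory.append({j: start[j] + alpha * (goal[j] - start[j]) for j in goal})
    return trajectory


def handle_command(cmd: Command, current: JointState, steps: int = 4) -> List[JointState]:
    """Map an upper-layer command to a joint-space trajectory (hypothetical dispatcher)."""
    if cmd.action not in ("grasp", "place"):
        raise ValueError(f"unsupported action: {cmd.action}")
    # A real system would also open or close the gripper here; omitted in this sketch.
    return interpolate(current, cmd.target, steps)
```

For example, `handle_command(Command("grasp", {"arm_lift_joint": 0.2}), {"arm_lift_joint": 0.0})` returns a list of four configurations, the last one at 0.2. Linear interpolation in joint space is used only because it is the simplest option; it says nothing about how the actual module generates its motions.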

Giskardpy is a key library for computing the movements. The manipulation module is implemented in Python with ROS, using the iai_kinematic_sim and iai_hsr_sim libraries in addition to Giskardpy.
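Giskardpy itself uses constraint-based motion control, solving an optimization problem in each control cycle; reproducing that is out of scope here. As a much simpler stand-in, the sketch below shows the general shape of a velocity-limited joint controller loop in plain Python: each cycle commands a velocity proportional to the remaining error, clamped to a maximum. It does not use Giskardpy, ROS, or the simulators, and the function names, gain, velocity limit and time step are arbitrary illustrative assumptions.

```python
from typing import Dict, Tuple

JointState = Dict[str, float]  # joint name -> position (illustrative)


def control_step(current: JointState, goal: JointState, gain: float,
                 max_vel: float, dt: float) -> JointState:
    """One control cycle: proportional velocity per joint, clamped to +/- max_vel."""
    nxt = {}
    for joint, q in current.items():
        error = goal[joint] - q
        vel = max(-max_vel, min(max_vel, gain * error))  # enforce the velocity limit
        nxt[joint] = q + vel * dt                        # integrate over one time step
    return nxt


def run_until_converged(current: JointState, goal: JointState, gain: float = 2.0,
                        max_vel: float = 0.5, dt: float = 0.1, tol: float = 1e-3,
                        max_iters: int = 1000) -> Tuple[JointState, int]:
    """Repeat control_step until every joint is within tol of its goal."""
    for step in range(max_iters):
        if all(abs(goal[j] - current[j]) < tol for j in current):
            return current, step
        current = control_step(current, goal, gain, max_vel, dt)
    raise RuntimeError("controller did not converge")
```

With the defaults, `gain * dt` is 0.2, so once the velocity is no longer clamped the remaining error shrinks by a factor of 0.8 per cycle and the loop settles on the goal; larger values make it overshoot, and above 2 it no longer settles.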


Line 57 (old) / Line 58 (new):


The perception module's task is to process the visual data received by the robot'


Line 64 (old) / Line 65 (new):

=== Link to open source and research ===


Prof. Dr. h.c. Michael Beetz PhD

Head of Institute

Contact via Andrea Cowley, assistant to Prof. Beetz: ai-office@cs.uni-bremen.de
