Differences

This shows you the differences between two versions of the page.


| robocup19 [2018/11/29 16:09] – s_7teji4 | robocup19 [2018/11/29 21:49] – s_7teji4 | ||

|---|---|---|---|

| Line 17: | Line 17: | ||

| </ | </ | ||

| === Team === | === Team === | ||

| - | {{ : | + | {{ : |

| < | < | ||

| + | <br> | ||

| <p align=" | <p align=" | ||

| Alina Hawkin <br> hawkin[at]uni-bremen.de | Alina Hawkin <br> hawkin[at]uni-bremen.de | ||

| Line 37: | Line 38: | ||

| Vanessa Hassouna <br> hassouna[at]uni-bremen.de | Vanessa Hassouna <br> hassouna[at]uni-bremen.de | ||

| </p> | </p> | ||

| - | </ | + | < |

| - | === Methodology and implementation=== | + | < |

| + | </ | ||

| + | ===Methodology and implementation=== | ||

| < | < | ||

| <div display=" | <div display=" | ||

| Line 44: | Line 47: | ||

| < | < | ||

| To perform the task, the robot needs to navigate the world autonomously and safely. This includes generating a representation of its surroundings as a 2D map, so that a path can be calculated to navigate the robot from point A to point B. Objects that are not accounted for within the map, e.g. movable objects like chairs or people who cross the robot's path, also have to be detected and avoided. The combination of all these aspects allows the robot to navigate safely within the environment. | To perform the task, the robot needs to navigate the world autonomously and safely. This includes generating a representation of its surroundings as a 2D map, so that a path can be calculated to navigate the robot from point A to point B. Objects that are not accounted for within the map, e.g. movable objects like chairs or people who cross the robot's path, also have to be detected and avoided. The combination of all these aspects allows the robot to navigate safely within the environment. | ||

| + | <iframe width=" | ||

| </ | </ | ||

| < | < | ||

| Line 53: | Line 57: | ||

| </p> | </p> | ||

| < | < | ||

| + | </ | ||

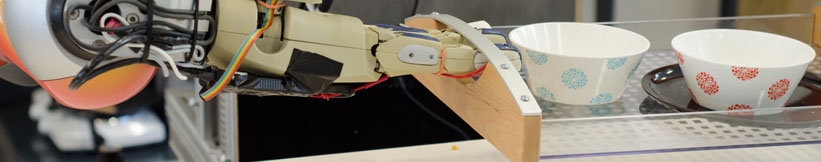

| The perception module has the task to process the visual data received by the robot' | The perception module has the task to process the visual data received by the robot' | ||

| </p> | </p> | ||
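
The navigation paragraph in the revision above combines three ideas: a 2D map, a path from point A to point B, and a reaction to obstacles that are not on the map. The following is a minimal sketch of that combination, not the team's implementation. It assumes the map is an occupancy grid of 0 (free) and 1 (occupied) cells, swaps in plain breadth-first search as the planner, and models unmapped obstacles as a set of cells that the robot only notices when it is about to enter them. The names `plan_path`, `navigate`, and `unmapped_obstacles` are hypothetical and chosen for illustration only.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 2D occupancy grid (0 = free, 1 = occupied).

    Returns the list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start and reverse them.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None


def navigate(grid, start, goal, unmapped_obstacles):
    """Follow the planned path and replan when an unmapped obstacle blocks it.

    unmapped_obstacles is a hypothetical stand-in for sensor input: a set of
    cells that are occupied in reality but free on the map.
    Returns the list of cells actually visited, or None if the goal is
    unreachable.
    """
    grid = [row[:] for row in grid]  # work on a copy of the map
    position = start
    visited = [position]
    path = plan_path(grid, position, goal)
    while path is not None and position != goal:
        nxt = path[1]
        if nxt in unmapped_obstacles:
            # The obstacle was not on the map: record it and plan again.
            grid[nxt[0]][nxt[1]] = 1
            path = plan_path(grid, position, goal)
            continue
        position = nxt
        visited.append(position)
        path = path[1:]
    return visited if path is not None else None
```

Calling `navigate([[0, 0, 0], [0, 0, 0], [0, 0, 0]], (0, 0), (2, 0), {(1, 0)})` makes the robot discover the blocked cell on its first step, add it to its copy of the map, and take a detour to the goal. A real system would use continuous poses, sensor-driven costmaps, and a cost-aware planner rather than this grid abstraction.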
