Differences

This shows you the differences between two versions of the page.


| robocup19 [2018/11/29 16:08] – s_7teji4 | robocup19 [2018/11/29 16:12] – s_7teji4 | ||

|---|---|---|---|

| Line 17: | Line 17: | ||

| </ | </ | ||

| === Team === | === Team === | ||

| - | {{ : | + | {{ : |

| < | < | ||

| + | <br> | ||

| <p align=" | <p align=" | ||

| Alina Hawkin <br> hawkin[at]uni-bremen.de | Alina Hawkin <br> hawkin[at]uni-bremen.de | ||

| Line 37: | Line 38: | ||

| Vanessa Hassouna <br> hassouna[at]uni-bremen.de | Vanessa Hassouna <br> hassouna[at]uni-bremen.de | ||

| </p> | </p> | ||

| + | <br> | ||

| + | <br> | ||

| </ | </ | ||

| === Methodology and implementation=== | === Methodology and implementation=== | ||

| Line 51: | Line 54: | ||

| < | < | ||

| Planning connects perception, knowledge, manipulation, and navigation by creating plans for the robot's activities. Here, we develop generic strategies so that the robot can decide which action to execute in which situation and in which order. One task of planning is failure handling and providing recovery strategies. | Planning connects perception, knowledge, manipulation, and navigation by creating plans for the robot's activities. Here, we develop generic strategies so that the robot can decide which action to execute in which situation and in which order. One task of planning is failure handling and providing recovery strategies. | ||

| + | </p> | ||

| + | < | ||

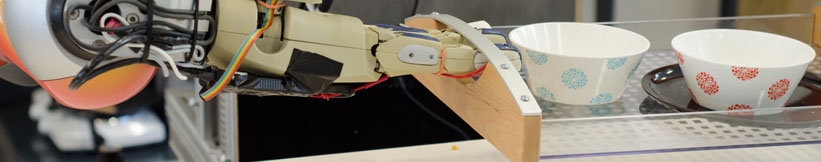

| + | The perception module has the task to process the visual data received by the robot' | ||

| </p> | </p> | ||

| </ | </ | ||
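The planning paragraph in the diff above describes plans that run actions in a fixed order and recover from failures. The following is a minimal sketch of that idea only, not the team's implementation: `Step`, `ActionFailure`, `run_plan`, and the toy actions `navigate`, `grasp`, and `look_again` are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


class ActionFailure(Exception):
    """Raised by an action that cannot complete (hypothetical failure type)."""


@dataclass
class Step:
    name: str
    action: Callable[[Dict], None]                     # mutates the world state
    recovery: Optional[Callable[[Dict], None]] = None  # run after a failure, before a retry
    max_attempts: int = 2


def run_plan(steps: List[Step], world: Dict) -> List[str]:
    """Execute steps in order; on failure, apply the step's recovery and retry."""
    log: List[str] = []
    for step in steps:
        for attempt in range(1, step.max_attempts + 1):
            try:
                step.action(world)
                log.append(f"{step.name}: ok")
                break
            except ActionFailure as err:
                log.append(f"{step.name}: failed ({err})")
                # Abort the whole plan if there is no recovery or no attempt left.
                if step.recovery is None or attempt == step.max_attempts:
                    log.append(f"{step.name}: giving up")
                    return log
                step.recovery(world)
                log.append(f"{step.name}: recovered")
    return log


# Toy actions standing in for navigation, manipulation, and perception.
def navigate(world: Dict) -> None:
    world["at_table"] = True


def grasp(world: Dict) -> None:
    if not world.get("object_visible"):
        raise ActionFailure("object not perceived")
    world["holding"] = True


def look_again(world: Dict) -> None:
    world["object_visible"] = True


def demo():
    world = {"object_visible": False}
    plan = [Step("navigate", navigate), Step("grasp", grasp, recovery=look_again)]
    return run_plan(plan, world), world
```

In this sketch, the first `grasp` attempt fails because the object has not been perceived, the recovery step `look_again` updates the world state, and the retry succeeds. A step without a recovery strategy aborts the plan on its first failure.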

