Table of Contents

Lesson 01 - Primer to Pick and Place

In this lesson, you'll see the setup of a very simple pick and place scenario. It consists of the following parts:

- In Gazebo, the following elements are used:

- A ground map

- A table with drawers

- Two mugs on the table

- A PR2 robot

- A CRAM lisp package setup, containing all necessary dependencies

- The gazebo_perception_process_module, an interface between CRAM and Gazebo

- A semantic map of the environment

Prerequisites

It is assumed that you successfully completed the installation of the CRAM system before starting this lesson.

Getting the Code

To download the lesson, in your ROS workspace, do the following:

$ rosws set lesson01 --git https://github.com/ai-seminar/cram-tutorials.git $ rosws update lesson01 $ source ~/.bashrc # To reread the available ROS packages in the package path

If you're not using rosws, although it is strongly recommended, you can get the repository here.

Preparation

To make sure that all software modules are built correctly and all dependencies are met, do

$ rosmake lesson01

If no errors occur during the compilation process, you're set.

Getting started

First, start the Slime REPL. This is the command line from which you will execute Lisp code and control the CRAM system. If you get lost when using Emacs, this cheat sheet might help you.

$ rosrun roslisp_repl repl

Now, load the lesson code. In order to do so, press , when the CL-USER> prompt shows up. After that, enter:

Command: ros-load-system

and hit Return. In the now appearing prompt, enter:

Enter package: lesson01

and confirm the next prompt

ROS package `lesson01', System (default `lesson01'):

by just pressing Return again. This will load the ASDF system lesson01 from within the ROS package lesson01. The REPL guesses the correct default system here because there is only one system in the .asdf file. When loading the system for the first time, a lot of Lisp system dependencies are compiled. This can take quite some time.

If everything went well, you should see something similar to the following:

To get into the correct lesson package, press ,, enter !p, press Return and enter:

Package: LESSON01

From now on, you will have a prompt like

LESSON01>

which will let you execute directly from the lesson 01 code package.

Starting the simulation environment

Now, back in the terminal, start

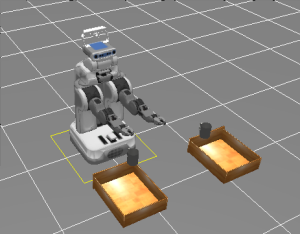

$ roslaunch lesson01 lesson01.launch

This will start Gazebo, spawn a PR2 robot and a table with two mugs on it.

The PR2 and the mugs are dynamic objects while the table is fixed to the ground, i.e. you can't move it. (If you encounter problems with parts of the simulation not showing up correctly, see here.)

The PR2 and the mugs are dynamic objects while the table is fixed to the ground, i.e. you can't move it. (If you encounter problems with parts of the simulation not showing up correctly, see here.)

In yet another terminal, open rviz:

$ rosrun rviz rviz

This will show you the PR2 model and the bottom map if properly configured:

Together with Gazebo, an instance of the ROS master (roscore) was started when executing the roslaunch command. All components using ROS connect to this master and exchange data through it's messaging system. In order to connect our REPL to it, enter the following command in the Slime REPL:

LESSON01> (start-lesson)

You will see output similar to this:

[(LESSON01) INFO] 1364288497.123: Starting lesson 01. [(ROSLISP TOP) INFO] 1364288497.331: Node name is /cram_hl_316821_1364288497 [(ROSLISP TOP) INFO] 1364288497.332: Namespace is / [(ROSLISP TOP) INFO] 1364288497.332: Params are NIL [(ROSLISP TOP) INFO] 1364288497.333: Remappings are: [(ROSLISP TOP) INFO] 1364288497.333: master URI is 127.0.0.1:11311 [(ROSLISP TOP) INFO] 0.000: Node startup complete [(ROSNODE) INFO] 445.472: ROS init #<FUNCTION CRAM-ROSLISP-COMMON::ROS-TIME-INIT>. [(ROSNODE) INFO] 445.472: ROS init #<FUNCTION CRAM-ROSLISP-COMMON::ROS-TF-INIT>. [(ROSNODE) INFO] 445.480: ROS init #<FUNCTION CRAM-GAZEBO-UTILITIES::INIT-CRAM-GAZEBO-UTILITIES>. [(ROSNODE) INFO] 445.484: ROS init #<FUNCTION LOCATION-COSTMAP::LOCATION-COSTMAP-VIS-INIT>. [(ROSNODE) INFO] 445.484: ROS init #<FUNCTION OCCUPANCY-GRID-COSTMAP::INIT-OCCUPANCY-GRID-COSTMAP>. [(ROSNODE) INFO] 445.484: ROS init #<FUNCTION POINT-HEAD-PROCESS-MODULE::INIT-POINT-HEAD-ACTION>. [(ROSNODE) INFO] 445.610: ROS init #<FUNCTION PR2-NAVIGATION-PROCESS-MODULE::INIT-PR2-NAVIGATION-PROCESS-MODULE>. [(ROSNODE) INFO] 445.747: ROS init #<FUNCTION SEMANTIC-MAP-COLLISION-ENVIRONMENT::INIT-SEMANTIC-MAP-COLLISION-ENVIRONMENT>. [(ROSNODE) INFO] 445.747: ROS init #<FUNCTION PR2-MANIPULATION-PROCESS-MODULE::INIT-COLLISION-ENVIRONMENT>. [(ROSNODE) INFO] 445.747: ROS init #<FUNCTION PR2-MANIPULATION-PROCESS-MODULE::INIT-PR2-MANIPULATION-PROCESS-MODULE>.

The second line, starting with Node name is includes the ROS node name of your current CRAM node instance. You will need this later.

To try out whether the components are connected properly, you can use the Gazebo/CRAM interface to get the pose of one of the mugs:

LESSON01> (cram-gazebo-utilities:get-model-pose "mug_1")

which should now yield the pose for the mug in question:

#<POSE-STAMPED FRAME-ID: "map", STAMP: 0.0 #<3D-VECTOR (1.466173d0 0.398379d0 0.895762d0)> #<QUATERNION (6.067517d-4 -0.001260d0 0.003830d0 0.999991d0)>>

The strange precision here results from the physics based simulation in Gazebo.

The Semantic Environment

The given lesson environment includes a simplistic semantic map for the scene. It includes the table which is placed in front of the PR2 robot. In terms of the semantic map, this table is called popcorn_table and can be referred to from within CRAM. When rviz is running, add a new display entity of type Marker.

This display type can visualize ROS messages of type visualization_msgs::Marker, which are boxes, spheres, meshes, and other 3D object types in different colors. As Marker Topic, you choose the node name of the current CRAM instance you initiated from within the Slime REPL, as mentioned earlier, and select the topic semantic_map_markers under it.

You can now visualize the semantic map using the following code from the REPL:

LESSON01> (sem-map-coll-env:publish-semantic-map-markers)

Adding another display entity of type CollisionMap and choosing the topic /kipla/location_costmap as the Topic parameter enables rviz to visualize the output of location costmap related designator resolutions:

LESSON01> (top-level (with-designators ((loc (location `((desig-props:on Cupboard) (desig-props:name "popcorn_table"))))) (reference loc)))

This will yield a displayed costmap like this:

and a pose on the table which was drawn from the shown samples, i.e.

and a pose on the table which was drawn from the shown samples, i.e.

#<POSE-STAMPED FRAME-ID: "map", STAMP: 0.0 #<3D-VECTOR (1.7d0 -0.55d0 0.9d0)> #<QUATERNION (0.0d0 0.0d0 0.0d0 1.0d0)>>

Executing Robot Plans

When picking up an object using the CRAM plan library, two functions are of major importance:

- perceive-object

- object-in-hand

The function perceive-object transforms the vague description of the object to pick up into a 3D pose information. Using the gazebo_perception_process_module, the effective model state of the simulated object is extracted and transformed into an object pose. All of this happens internally and can be triggered using:

LESSON01> (top-level (move-away-arms) (with-process-modules (with-designators ((mug (object `((desig-props:name "mug_1"))))) (cram-plan-library:perceive-object 'cram-plan-library:the mug))))

This results in an object designator description defining the object in question:

#<OBJECT-DESIGNATOR ((AT #<LOCATION-DESIGNATOR ((CRAM-DESIGNATOR-PROPERTIES:POSE #<POSE-STAMPED FRAME-ID: "map", STAMP: 0.0 #<3D-VECTOR (1.4769388895789999d0 0.39931264675550426d0 0.8953908542232183d0)> #<QUATERNION (-3.0138609589887126d-4 -4.576985427639737d-4 -2.7947104564445284d-4 0.999999810787182d0)>>)) {1008FB78D1}>) (NAME "mug_1")) {1008FB7C91}>

The object-in-hand function is actually a goal the robot tries to achieve. It is called using

LESSON01> (top-level (move-away-arms) (with-process-modules (with-designators ((mug (object `((desig-props:name "mug_1"))))) (let ((mug-perceived (cram-plan-library:perceive-object 'cram-plan-library:the mug))) (achieve `(cram-plan-library:object-in-hand ,mug-perceived))))))

Either function relies on whether the robot stands at a position from where it probably can see or reach the location on the table. A location costmap is generated for locations to stand at and a random weighted sample is drawn from this probability distribution:

In the lesson code (specifically in lesson01.lisp), there is a function for executing both parts in order:

(defun get-mug-1 () ...)

You can call this function by simply starting it from the REPL:

LESSON01> (get-mug-1)

It will also take care of functions like moving the robot's spine up all the way and getting the arms out of the way before doing navigation/manipulation tasks. The PR2 will drive around and will try to get into a suitable position for grasping. It then will try to grasp the object.

Once the object is being grasped, the robot will execute a motion to achieve the following grasp and carrying pose:

Handle-based grasping

The fact that the PR2 grasps the mug on it's handle results from two things:

- An extensive reasoning machinery that checks for the existance of handles on an object to grasp, plus an algorithm that calculates the respective grasp poses

- The definition of the object handle in the source code

The system relies on information given to it about handles. This information if thought to be stored in an external knowledge source such as KnowRob but can be given by hand here.

The important part in the source code for handle definition is found here:

(with-designators ((handle-loc (location `((desig-props:pose ,(tf:make-pose (tf:make-3d-vector -0.12 0.0 0.07) (tf:euler->quaternion :ax (/ pi 2))))))) (mug-handle (object `((desig-props:type desig-props:handle) (desig-props:at ,handle-loc)))) (mug (object `((desig-props:name "mug_1") (desig-props:handle ,mug-handle)))))

The variable handle-loc defines the relative pose of the handle with respect to the bottom-most center point of the mug, i.e. the pose that is returned by the gazebo_perception_process_module.

mug-handle then defined a handle-object that is then placed as a handle into the actual mug-object, mug.

Completing the Pick and Place Task

To make the robot execute the task at hand, the function displace-mug-1 has been prepared. You can find it in lesson01.lisp. It is started by:

LESSON01> (displace-mug-1)

During the runtime of this function, the robot will try to get hold of the object (i.e., pick it up) and then put it down on a different spot of the table.

Since finding a good spot to put the object down on the table isn't that easy, it is possible that the PR2 will drive around a bit and try different spots before it finds a suitable location.

For putting down the object, the same techniques with respect to location costmaps are used as in the pick-up part of this tutorial.

Pitfalls and known issues

In case the table doesn't show up completely when starting the lesson launchfile (and, Gazebo in particular), you have to restart the Gazebo client GUI. The problem will show you a scene similar to this one:

Just close the Gazebo window and restart the client, using:

$ `rospack find gazebo`/gazebo/bin/gzclient -g `rospack find gazebo`/lib/libgazebo_ros_paths_plugin.so

The (simulated) table is physically there, but the visual is missing. The simulation could go on without it, but for the scenery's sake, you can get it back into the scene with this fix.

— Winkler, Jan 2013/03/28 11:10