TUM Kitchen Data Set

Introduction

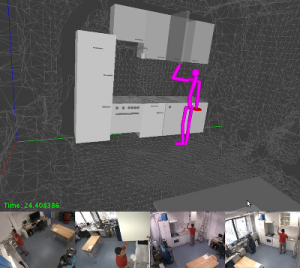

The TUM Kitchen Data Set is provided to foster research in the areas of markerless human motion capture, motion segmentation and human activity recognition. It is intended to aid researchers in these fields by providing a comprehensive collection of sensory input data that can be used to test and verify their algorithms. Thanks to the manually annotated "ground truth" labels of the underlying actions, it is also meant to serve as a benchmark for comparative studies. The recorded activities were selected to provide realistic, natural-looking motions, and consist of everyday manipulation activities in a natural kitchen environment.

Description of the Data

The TUM Kitchen Data Set contains observations of several subjects setting a table in different ways. Some perform the activity as a robot would, transporting the items one by one; others behave more naturally and grasp as many objects as they can at once. In addition, there are two episodes in which the subjects repeatedly performed reaching and grasping actions. The data is mainly intended for human motion tracking, motion segmentation, and activity recognition.

To provide sufficient information for recognizing and characterizing the observed activities, we recorded the following multi-modal sensor data:

- Video data from four fixed, overhead cameras (384×288 pixels RGB color or 780×582 pixels raw Bayer pattern, at 25Hz)

- Motion capture data (*.bvh file format) extracted from the videos using our markerless full-body MeMoMan tracker

- RFID tag readings from three fixed readers embedded in the environment (sample rate 2Hz)

- Magnetic (reed) sensors that detect when a door or drawer is opened (sample rate 10Hz)

- Action labels (the data is labeled separately for the left hand, the right hand, and the trunk of the person)
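Because the sensors run at different rates (video at 25Hz, reed sensors at 10Hz, RFID readers at 2Hz), using them together requires aligning them in time. The following Python sketch shows one simple approach: for each video frame, hold the most recent sensor reading. It assumes, hypothetically, that every sensor sample carries a timestamp in seconds on the same clock as the video and that video frame 0 is recorded at time `video_t0`; the function names are illustrative and are not part of the data set's tools.

```python
from bisect import bisect_right

VIDEO_FPS = 25.0  # camera frame rate given in the sensor list above


def frame_time(frame, video_t0):
    """Timestamp in seconds of a video frame, assuming evenly spaced frames."""
    return video_t0 + frame / VIDEO_FPS


def hold_last_reading(sample_times, sample_values, frame, video_t0):
    """Return the latest sensor value recorded at or before a video frame.

    sample_times: ascending timestamps in seconds (hypothetical format, assumed
    to share the video clock). Returns None if no sample precedes the frame.
    """
    t = frame_time(frame, video_t0)
    i = bisect_right(sample_times, t)  # number of samples with time <= t
    return sample_values[i - 1] if i > 0 else None


# Example: a 2 Hz sensor sampled at 0.0 s, 0.5 s and 1.0 s.
times = [0.0, 0.5, 1.0]
values = ["tag A", "tag B", "no tag"]
print(hold_last_reading(times, values, 13, 0.0))  # frame 13 is at 0.52 s -> tag B
```

Sample-and-hold is used here rather than interpolation because RFID detections and door or drawer states are discrete; for continuous signals such as joint positions, interpolation may be more appropriate.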

More detailed documentation of the data set is provided in the following technical report: The TUM Kitchen Data Set

Publications

News

- March 16, 2010: Angela Yao, ETH Zürich, contributed labels for almost all remaining sequences. Thanks a lot!

- February 8, 2010: Both the BVH files and the extrinsic calibration have been adapted to match the coordinate system of the CSV files (previously, there was an erroneous rotation that resulted in inverted values for y and z).

- February 8, 2010: Added MATLAB calibration provided by Jürgen Gall, BIWI, ETH Zürich.

- February 5, 2010: The JPG sequences now all start at frame 0, so the initial frames can be used to compute the background model for background subtraction.

- October 27, 2009: Fixed a small issue with the frame time of the BVH files, which was previously given too low. The corrected files are available below.

- September 18, 2009: Eight new sequences have been added to the data set, and some problems with different coordinate systems have been resolved.

- July 28, 2009: The paper "The TUM Kitchen Data Set of Everyday Manipulation Activities for Motion Tracking and Action Recognition" describing the data set has been accepted for publication at the THEMIS workshop held in conjunction with ICCV 2009.

- May 18, 2009: Data set released to the public. If you encounter problems or have ideas for improvement, please contact Moritz Tenorth or Jan Bandouch. We will add more data and documentation in the future.
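The February 8, 2010 entry describes the old error as a rotation that inverted y and z. For point coordinates, negating both y and z is exactly a 180° rotation about the x axis, which explains how a rotation (rather than a mirroring) can produce this effect. The derivation below assumes the error acted on positions and left x unchanged; rotations stored in BVH channels would have to be transformed by conjugation with the same matrix, which is not shown.

```latex
R_x(\pi) =
\begin{pmatrix} 1 & 0 & 0 \\ 0 & \cos\pi & -\sin\pi \\ 0 & \sin\pi & \cos\pi \end{pmatrix}
=
\begin{pmatrix} 1 & 0 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & -1 \end{pmatrix},
\qquad
R_x(\pi)\begin{pmatrix} x \\ y \\ z \end{pmatrix} = \begin{pmatrix} x \\ -y \\ -z \end{pmatrix},
\qquad
\det R_x(\pi) = 1,
\qquad
R_x(\pi)^{-1} = R_x(\pi).
```

Since $\det R_x(\pi) = 1$, the transformation is a proper rotation, and since it is its own inverse, applying it once more to positions from files downloaded before that date would, under the stated assumption, recover the corrected coordinates.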

Episodes

You can find the data here: http://ias.cs.tum.edu/software/kitchen-activity-data

Tracking starts at the video frame given by startframe and ends at endframe; for example, the first row in the pose files of episode 0-0 corresponds to video frame 240 of that episode.
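The relationship between pose rows and video frames amounts to a constant offset of startframe. A minimal Python sketch, assuming one pose row per video frame and an inclusive endframe (both assumptions, not stated above); the helper names are hypothetical:

```python
def pose_row_to_video_frame(row, startframe):
    """Video frame number for a 0-based row of a pose file.

    Assumes one pose row per video frame, beginning at the episode's startframe.
    """
    if row < 0:
        raise ValueError("row index must be non-negative")
    return startframe + row


def video_frame_to_pose_row(frame, startframe, endframe):
    """Pose-file row for a video frame, or None outside the tracked range.

    Assumes endframe is inclusive (the last tracked frame).
    """
    if not startframe <= frame <= endframe:
        return None
    return frame - startframe


print(pose_row_to_video_frame(0, 240))  # episode 0-0 starts at frame 240 -> 240
```

For episode 0-0, `pose_row_to_video_frame(0, 240)` returns 240, matching the example in the text; the endframe for each episode would have to be taken from the episode listing.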

The task descriptions read as follows:

- stt: set the table

- robot: transport each object separately, as done by an inefficient robot

- human: take several objects at once, as humans usually do

- repetitive: repeatedly pick up and put down objects at different places

The video data is available in three different formats:

- avi: xvid encoded RGB color video of size 384×288 pixels

- raw: mpeg4 (lavc) encoded video of the original raw camera input stream (monochrome Bayer pattern RGGB) of size 780×582 pixels

- jpg: gzipped tar archive with one JPEG file per frame (384×288 pixels); note the very large file size
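The raw format keeps the single-channel Bayer mosaic, so it has to be demosaiced before it can be viewed in color. The simplest approach, sketched below in Python, bins each 2×2 RGGB cell into one RGB pixel at half resolution (390×291 for the 780×582 frames). It assumes the frame has already been decoded from the video into rows of intensities with a red pixel in the top-left corner; `bin_rggb` is a hypothetical helper, not part of the data set. Full demosaicing (for example bilinear interpolation) would keep the original resolution, and lossy mpeg4 compression of the mosaic may introduce some color artifacts either way.

```python
def bin_rggb(raw):
    """Half-resolution RGB from a raw RGGB Bayer frame by 2x2 binning.

    raw: list of rows of ints (single channel) with even width and height.
    Each 2x2 cell is assumed to be laid out as
        R G
        G B
    and becomes one RGB pixel: (R, integer mean of the two Gs, B).
    """
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("frame dimensions must be even")
    rgb = []
    for y in range(0, height, 2):
        top, bottom = raw[y], raw[y + 1]
        row = []
        for x in range(0, width, 2):
            r = top[x]
            g = (top[x + 1] + bottom[x]) // 2
            b = bottom[x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


# Tiny 2x4 example frame: two RGGB cells side by side.
raw = [[10, 20, 11, 21],
       [30, 40, 31, 41]]
print(bin_rggb(raw))  # [[(10, 25, 40), (11, 26, 41)]]
```

Plain lists keep the example dependency-free; for full-size frames, an array library would be considerably faster.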

Additional Information and Tools

- MATLAB calibration (provided by Jürgen Gall, BIWI, ETH Zürich)

- A description of the .bvh file format can be found at http://www.cs.wisc.edu/graphics/Courses/cs-838-1999/Jeff/BVH.html

- The .bvh files can be viewed with the following programs (we are not affiliated with the authors of any of these programs and have not tested them):

  - Blender: File→Import→MotionCapture(*.bvh) [Linux, Windows]

  - MATLAB: Motion Capture Toolbox [Linux, Windows]

  - Bvhacker: http://davedub.co.uk/bvhacker/ [Windows]

  - WX Motion Viewer: http://cgg.mff.cuni.cz/~semancik/research/wxmv/index.html [Linux, Windows]
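For scripts that read the motion capture data directly instead of viewing it, the MOTION section of a BVH file is straightforward to parse: a `Frames:` line, a `Frame Time:` line, and then one line of channel values per frame. The Python sketch below assumes these two header lines immediately follow the `MOTION` keyword and leaves the HIERARCHY section unparsed, so the meaning and order of the channels must be taken from that section of the file. `parse_bvh_motion` is a hypothetical helper.

```python
def parse_bvh_motion(text):
    """Return (frame_time, frames) from the MOTION section of a BVH file.

    frames is a list of float lists, one per frame, in the channel order
    declared in the HIERARCHY section (which this sketch does not parse).
    """
    lines = [ln.strip() for ln in text.splitlines()]
    if "MOTION" not in lines:
        raise ValueError("no MOTION section found")
    start = lines.index("MOTION")
    # Assumed layout: "Frames: N" and "Frame Time: T" directly after MOTION.
    n_frames = int(lines[start + 1].split(":", 1)[1])
    frame_time = float(lines[start + 2].split(":", 1)[1])
    rows = [ln for ln in lines[start + 3:] if ln]  # skip blank lines
    frames = [[float(v) for v in ln.split()] for ln in rows[:n_frames]]
    if len(frames) != n_frames:
        raise ValueError(f"expected {n_frames} frames, found {len(frames)}")
    return frame_time, frames


example = """MOTION
Frames: 2
Frame Time: 0.04
0.0 1.0 2.0
3.0 4.0 5.0
"""
frame_time, frames = parse_bvh_motion(example)
print(frame_time, len(frames))  # 0.04 2
```

The parsed frame time gives the duration of each pose in seconds. Assuming one pose per 25Hz video frame, comparing it with 0.04 s can serve as a quick sanity check, for example to detect older files affected by the frame time issue fixed on October 27, 2009.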